“How can you not be pedantic about baseball?” –Effectively Wild

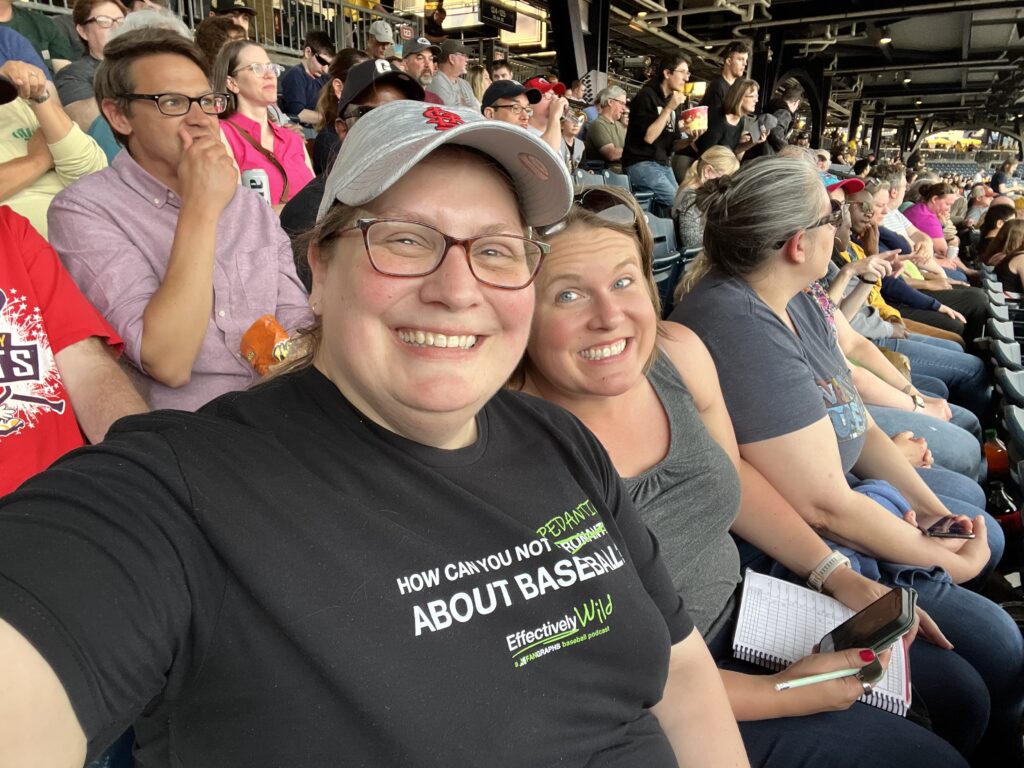

Last week was the 38th annual conference for NASIG. It was held in Pittsburgh, PA, at the same location where we met for our 34th annual. There was a smaller crowd, but as you can see above, this time we were able to attend a baseball game at PNC Park.

I’ve been a member of NASIG my whole career, attending every conference since 2002. But, just in case you are new here, or maybe need a refresher, “NASIG is an independent, non-profit organization working to advance and transform the management of information resources. [The] ultimate goal is to facilitate and improve the distribution, acquisition, and long-term accessibility of information resources in all formats and business models.”

In years past, I often took meticulous, almost transcription level notes of conferences sessions to share here. I fell out of practice with that even before the pandemic, but that certainly didn’t help. One great thing about NASIG is that all of the sessions will be included in the Proceedings, which are now Open Access and will be available in the next year.

I took quite a few offline notes for myself — a practice I’ve been trying to adopt for all work meetings now that my memory recall is declining while the number of candles on my birthday cake increases. I want to share a few highlights of things that I found valuable, and maybe you will, too.

Licensing stuff

We implemented Alma in June 2020, and until April 2022, I was attempting to handle all Acquisitions and Electronic Resources functions. I had no time for things that seemed pointless, and the license module was top of that list. There were so few default fields and I didn’t have the understanding to even look for the documentation that might have alerted me to how I could choose other (more useful) fields to be used. However, an off-hand comment at the Alma tech services user group on day one of the conference got me digging, and now I have plans to work with our Electronic Resources Librarian to flesh out the license information stored in Alma.

On day two of the conference, I attended the licensing workshop led by Claire Dygert. I was thrilled to have this opportunity, as Claire is one of my favorite NASIG people over the years, and she’s done a lot of great work in the areas of licensing and negotiation. Two things I’m taking away from it is plans to develop a template of terms we definitely want included in our license agreements, and a workflow for requesting price quotes well in advance of renewals as the opener for negotiations from a principled perspective.

Collections stuff

One of the sessions I attended on the third day of the conference was about documenting post-cancellation access to journals. My current institution has canceled relatively few journal subscriptions over the years, and I have not had a particularly thorough workflow for this. One big revelation for me was the order history tab in EBSCOnet, which I’m quite certain I’d never looked at before. No more guessing when our online subscription began based on the fund code we used (which is sometimes inconsistent)!

(I have not been great at documentation in the past, but due to the aforementioned loss of memory recall at the level I used to have, I’m working on that. I also attended part of a session about documentation in general that I peaced out on early when the first presenter was getting too much in the weeds of their particular situation. I heard the second presenter had more concrete workflows/ideas, so I look forward to reading that in the proceedings.)

My next steps will be to develop a workflow and documentation for recording this information in Alma. Possibly in conjunction with the license project I noted before.