Speaker: Herbert Van de Sompel, Los Alamos National Laboratory

Scholarly communication has transitioned from a paper-based system, to a system of digitized paper, to a natively digital and networked system. His group is researching this transition and what it means.

He has worked on several relevant projects over the years. MESUR looks at metrics for scholarly objects. Memento makes versions of resources accessible. Hiberlink is time travel for the scholarly web. ResourceSync is for moving resources from one place to another.

The common denominator is using the web for scholarly communication. It’s about interoperability across scholarly systems and information using the web. They focus on making scholarly content more accessible to machines, which in turn makes it easier for humans to use.

Will we be able to revisit the scholarly record in the future, as we could in the paper-based system?

In the paper-based system, it is easy to revisit the original publication context of the article. You may have to visit several libraries to do so, but it’s possible to completely reconstruct it years after the publication of the original article.

In the current system of web-based journals, we have not only references to the context, but also links to it. We don’t have to travel to visit the referenced articles. Libraries are no longer responsible for archiving this content, so special organizations like Portico emerged. But can we still revisit the entire publication context in the future under this model?

Article-to-article links are brittle and can break, particularly with mergers and acquisitions of publishers. DOIs and other persistent identifiers are a work-around, but they’re not perfect, and they’re geared more towards being easy for humans than for computers. The landing page for a DOI makes sense to humans, but machines can’t tell which of the resources linked from it is the real thing that should be archived. We need a machine-readable splash page, too.

In the current system, we rely on special-purpose archives for long-term preservation when the general commercial systems (i.e., publisher websites) close. But not everything is being archived, and what is being archived is the content least in danger, such as articles from large publishers that are easy to grab from the web. What we’re collecting is also journal-based, which doesn’t capture any of the scholarly web content that is most in danger of disappearing over time.

It gets worse.

Our online scholarly content now links to more than just articles: software, data, project blogs, and other content researchers create in the course of their work. These things are not preserved the way article content is. The software can change and the data can disappear. Over time, the context of the article is lost. Hiberlink seeks to fix this.

Reference rot is link rot (the link no longer works) plus content drift (the link works, but the content is no longer what was cited). He quantified reference rot for PubMed content by looking at how many of the referenced web resources had been archived within a 14-day window before or after publication, and most had not. Of those that had been archived, only a few experienced reference rot. The un-archived content was almost entirely rotten.
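To make those definitions concrete, here is a minimal Python sketch of how a single reference might be classified. It is not the method used in the study: the `Reference` fields and the helper names are hypothetical, and deciding whether content "still matches" is assumed to have been done elsewhere.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Reference:
    """One web reference cited by an article (hypothetical structure)."""
    uri: str
    resolves: bool                    # does the live URI still return content?
    content_matches: Optional[bool]   # does the live content match what was cited?
    snapshot_date: Optional[date]     # date of the closest archived copy, if any


def is_link_rot(ref: Reference) -> bool:
    # Link rot: the URI no longer leads anywhere.
    return not ref.resolves


def is_content_drift(ref: Reference) -> bool:
    # Content drift: the URI works, but the content has changed since citation.
    return ref.resolves and ref.content_matches is False


def is_reference_rot(ref: Reference) -> bool:
    # Reference rot is link rot plus content drift.
    return is_link_rot(ref) or is_content_drift(ref)


def archived_in_window(ref: Reference, published: date, window_days: int = 14) -> bool:
    # True if a snapshot exists within `window_days` before or after publication.
    if ref.snapshot_date is None:
        return False
    return abs((ref.snapshot_date - published).days) <= window_days
```

A reference with a snapshot ten days after publication counts as archived in the window; one whose URI resolves but whose content has changed counts as rotten because of drift.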

This is not just a STEM-H problem. The New York Times recently published an article about law journals and Supreme Court decisions, both of which show a dramatic percentage of link and reference rot. It also exists outside of scholarly/academic content, such as in Wikipedia references.

There are limitations with crawler-driven web archiving, particularly when embedded content is archived at different times from the HTML. This would be really bad in the scholarly context.

Librarians need to increase web archiving projects with focused crawls. Start with your own institutional web pages (projects). There are subscription-based services like Archive-It. SiteStory is open-source software for self-archiving that his team developed.

In the course of producing an article, there are several intervention points for self-archiving references. There’s a prototype Hiberlink plugin for Zotero that will auto-archive a site when the author bookmarks it. perma.cc helps authors and journals create permanent archived copies of the pages they cite, which they can then use as persistent links in their papers.

The Memento plug-in for Chrome will allow you to view the archived versions of websites.

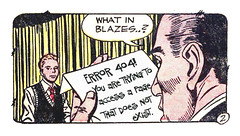
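Under the hood, Memento (RFC 7089) works by datetime negotiation: a client asks a TimeGate for a resource as it existed at a given time by sending an `Accept-Datetime` request header. The sketch below only builds that header; the TimeGate address in the usage note is a placeholder, not a real service, and the function name is made up for illustration.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def accept_datetime_header(when: datetime) -> dict:
    """Build the Accept-Datetime header for a Memento TimeGate request.

    `when` must be timezone-aware; it is converted to GMT because the
    header uses the HTTP date format (e.g. "Thu, 31 May 2007 20:35:00 GMT").
    """
    if when.tzinfo is None:
        raise ValueError("`when` must be timezone-aware")
    when_utc = when.astimezone(timezone.utc)
    return {"Accept-Datetime": format_datetime(when_utc, usegmt=True)}
```

A client would send these headers with a GET request to a TimeGate for the original URI (for example, a hypothetical `https://timegate.example.org/http://example.org/page`) and follow the redirect to the archived version closest to the requested time.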

When we link to archived resources, the current practice is to replace the original URI with the URI of the snapshot in the archive. This prevents visiting the original URI if desired, and prevents the use of snapshots in other web archives, because archives use the original URI as the lookup key. It also requires the permanent existence and uptime of the archive. We’re just replacing one link rot problem with another.

Many commercial and non-commercial web archiving services for links have come and gone, taking their URIs with them. He is starting to work on a way to augment the links to provide temporal context. The project is unofficially called 404 No More.
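One way to picture an augmented link is an ordinary HTML anchor that keeps the original URI in `href` and carries the snapshot URI and citation date alongside it. The sketch below is an illustration only: the attribute names `data-versionurl` and `data-versiondate` and the function name are assumptions made for this example, not something specified in the talk.

```python
from datetime import date
from html import escape


def augmented_link(original_uri: str, snapshot_uri: str, cited_on: date, text: str) -> str:
    """Return an HTML anchor that keeps the original URI and adds temporal context.

    Attribute names are assumed for illustration:
      data-versionurl  -> URI of an archived snapshot
      data-versiondate -> date the resource was cited (ISO 8601)
    """
    return (
        '<a href="{href}" data-versionurl="{snap}" data-versiondate="{when}">{text}</a>'
    ).format(
        href=escape(original_uri, quote=True),
        snap=escape(snapshot_uri, quote=True),
        when=cited_on.isoformat(),
        text=escape(text),
    )
```

Because the original URI stays in `href`, a reader can still visit the live page, and a tool can use the original URI plus the date to look for snapshots in any archive if the one named in the link disappears.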

Research data is a huge component of web-based scholarly communication. There is discussion of looking at software as scholarly communication (GitHub). Presentations are a part of this, so we need to think about sites like SlideShare as a part of the scholarly record. Wikis are increasingly used for scholarship. Electronic lab notebooks augment experiments and allow them to be shared online. MyExperiment is a social portal to share scientific workflows.

The research process, not just its outcome, is becoming visible on the web, but it contains many objects we don’t know how to archive. There is increased use of common web platforms for scholarship that give the impression of archiving, but are merely a record. The communicated objects are heterogeneous, dynamic, compound, inter-related, and on the web, and archiving must take these characteristics into account.

What is the scholarly record? Where does it start? Where does it end?