Speakers: Ros Raeford & Beverly Dowdy

Users were unhappy with eresource management, due in part to the library’s ad hoc approach: staff relied on users to notify them when there were access issues. A heavy reliance on email and memory meant things slipped through the cracks. They were not a train wreck waiting to happen; they were a train wreck that had already occurred.

They needed to develop a deeper understanding of their workflows and processes to identify areas for improvement. Earlier attempts had failed because not all the right people were at the table. Each stage of the lifecycle needs to be represented.

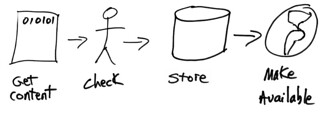

Oliver Pesch’s 2009 presentation on “ERMS and the E-Resources Lifecycle” provided the framework they used. They created a staff responsibility matrix to determine exactly what they did, and then did interviews to get at how they did it. The narrative was translated to a workflow diagram for each kind of resource (ebooks, ejournals, etc.).

Even though some of the subject librarians were good about checking for duplicates before requesting things, acquisitions still had to repeat the process because they didn’t know whether it had been done. This is just one example of the duplication of effort that they discovered in their workflow review.

For the ebook package process, they found it was so unclear they couldn’t even diagram it. It’s very linear, but it could have a number of processes happening in parallel.

Lots of words on screen with great ideas of things to do for quality control and user interface improvements. Presenter does not highlight any. Will have to look at it later.

One thing they mentioned is identifying essential tasks that are done by only one staff member. They then did cross-training to make sure that if that person is out for the day, someone else can do it.
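As a rough illustration of that step (not something the presenters showed), a staff responsibility matrix can be scanned for tasks that only one person performs. The staff labels and task names below are invented for the example, and the data structure is an assumption about how such a matrix might be recorded.

```python
from collections import defaultdict

# Hypothetical responsibility matrix: staff member -> tasks they perform.
# All names and tasks here are made up for illustration.
responsibility_matrix = {
    "staff_a": {"license review", "activate access"},
    "staff_b": {"activate access", "pay invoices"},
    "staff_c": {"activate access", "troubleshoot access"},
}


def single_person_tasks(matrix):
    """Return {task: staff_member} for tasks performed by exactly one person."""
    performers = defaultdict(set)
    for staff, tasks in matrix.items():
        for task in tasks:
            performers[task].add(staff)
    return {
        task: next(iter(people))
        for task, people in performers.items()
        if len(people) == 1
    }


# Tasks listed here are candidates for cross-training.
at_risk = single_person_tasks(responsibility_matrix)
for task in sorted(at_risk):
    print(f"{task}: only {at_risk[task]}")
```

Anything the function returns is a task with no backup, which is where cross-training would start.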

Surprisingly, they were not using EDI for firm orders, nor had they implemented tools like PromptCat.

Applications that make things work for them:

JTacq — using this for the acquisition/collections workflow. I’ve never heard of it, but will investigate.

ImageNow — not an ERM but a document management tool (enterprise content management). It is being used by many university departments, but not by many libraries.

They used SharePoint as a meeting space for the teams.